Abstract

Modern botnets are no longer rudimentary scripts with static payloads. Today, they are distributed, intelligent, and adaptive systems capable of learning from their target environments. This article dissects the cognitive mechanics of malicious crawlers—from reconnaissance to real-time evasion—and explains how ShieldsGuard uses adaptive traffic engineering and AI-powered anomaly detection to dismantle these threats before they mature into full-scale attacks.

Phase 1: Reconnaissance – Observing Without Provoking

Every intelligent attack begins with silent observation. Rather than triggering alerts, crawlers collect information through low-volume traffic that mimics legitimate users.

What They Observe:

- Which endpoints are accessible (e.g., /api/auth, /login, /checkout)

- How the system responds to malformed or parameterized requests

- Rate limits, timeout policies, and redirect behavior

- CAPTCHA triggers and JavaScript challenges

- TLS fingerprinting and handshake latency

ShieldsGuard’s detection engine uses anomaly detection and passive telemetry to flag reconnaissance attempts early—before they escalate into active probing.
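To make the idea of flagging quiet reconnaissance concrete, here is a minimal sketch. It is not ShieldsGuard's implementation: the `ReconTracker` class, its thresholds, and the `/cart` and `/account` paths are assumptions chosen for illustration. It flags a client that stays at low volume but touches many distinct endpoints and mostly receives error responses.

```python
from collections import defaultdict, deque
from dataclasses import dataclass, field
from typing import Deque, Dict, Tuple


@dataclass
class ReconTracker:
    """Hypothetical tracker: flags broad, error-heavy, low-volume probing."""
    window_seconds: float = 3600.0   # assumed look-back window
    min_distinct_paths: int = 5      # assumed breadth threshold
    min_error_ratio: float = 0.5     # assumed share of 4xx/5xx responses
    _events: Dict[str, Deque[Tuple[float, str, int]]] = field(
        default_factory=lambda: defaultdict(deque)
    )

    def observe(self, client_id: str, ts: float, path: str, status: int) -> bool:
        """Record one request and return True if the client looks like recon."""
        events = self._events[client_id]
        events.append((ts, path, status))
        # Drop events older than the window (timestamps assumed non-decreasing).
        while ts - events[0][0] > self.window_seconds:
            events.popleft()
        distinct_paths = {p for _, p, _ in events}
        error_ratio = sum(1 for _, _, s in events if s >= 400) / len(events)
        return (len(distinct_paths) >= self.min_distinct_paths
                and error_ratio >= self.min_error_ratio)


# One request every five minutes: far too slow for a plain rate limit to notice.
tracker = ReconTracker()
probe_paths = ["/api/auth", "/login", "/checkout", "/cart", "/account"]
flags = [tracker.observe("client-a", i * 300.0, p, 404)
         for i, p in enumerate(probe_paths)]
```

In this sketch the fifth probe is the first one flagged, because that is when the breadth threshold is reached. A production system would combine this with many other signals rather than rely on a single heuristic.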

Phase 2: Probing and Learning – Algorithmic Behavior Modeling

Once reconnaissance yields insights, the crawler shifts into probing mode. In this phase, bots begin testing the system’s thresholds through more deliberate requests, often distributed across diverse IPs and ASNs.

Common Tactics:

- Emulating real browsers via headless tools (e.g., Puppeteer, Selenium)

- Rotating headers and parameters to evade WAF rules

- Triggering edge cases across multiple endpoints

- Timing-based probes to detect caching or rate-limiting logic

ShieldsGuard monitors these interactions using a real-time behavioral stack. It evaluates consistency between declared client behavior and actual browser execution, flagging discrepancies as indicators of automation.
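The consistency check described above can be sketched roughly as follows. The `ClientObservation` fields and the `automation_indicators` function are hypothetical names, not part of any ShieldsGuard API. The sketch assumes a JavaScript challenge reports `navigator.userAgent` and `navigator.webdriver` back to the server, which then compares them with what the HTTP headers declared.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ClientObservation:
    """Hypothetical record of what a client declared versus what it did."""
    header_user_agent: str         # User-Agent sent in the HTTP request
    js_executed: bool              # whether the JavaScript challenge ran
    js_user_agent: Optional[str]   # navigator.userAgent, if the challenge ran
    webdriver_flag: bool           # navigator.webdriver, as reported by the challenge


def automation_indicators(obs: ClientObservation) -> List[str]:
    """Return human-readable mismatches between declared and observed behavior."""
    indicators = []
    claims_browser = obs.header_user_agent.startswith("Mozilla/")
    if claims_browser and not obs.js_executed:
        indicators.append("declared browser never ran the JavaScript challenge")
    if obs.js_user_agent is not None and obs.js_user_agent != obs.header_user_agent:
        indicators.append("header User-Agent differs from navigator.userAgent")
    if obs.webdriver_flag:
        indicators.append("navigator.webdriver reported true")
    return indicators


# A headless client that spoofs its header but not its JavaScript environment.
suspect = ClientObservation(
    header_user_agent="Mozilla/5.0 (X11; Linux x86_64) Chrome/124.0",
    js_executed=True,
    js_user_agent="Mozilla/5.0 (X11; Linux x86_64) HeadlessChrome/124.0",
    webdriver_flag=True,
)
suspect_indicators = automation_indicators(suspect)
```

Returning a list of reasons rather than a single boolean keeps each signal inspectable. Well-configured automation can hide any one of these signals, so they are better treated as inputs to a score than as a verdict.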

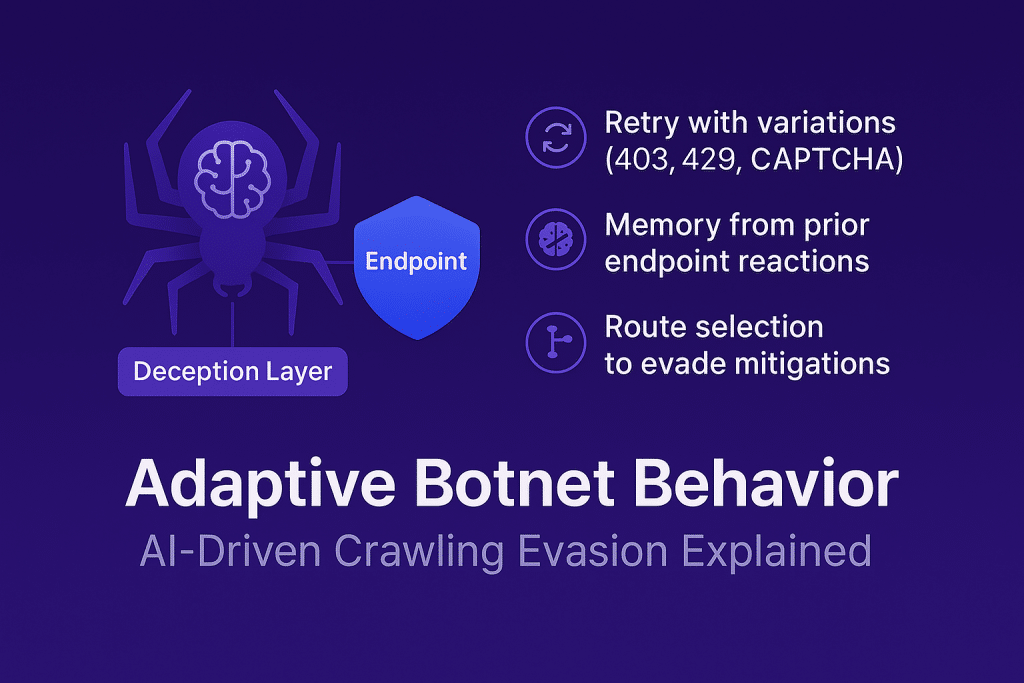

Phase 3: Adaptation – The Feedback Loop

Advanced botnets don’t give up easily. They adapt based on the feedback they receive. If an IP is blocked, a new proxy is used. If a path triggers a CAPTCHA, another path is tried. This ongoing loop of action and correction allows botnets to slowly bypass static defenses.

ShieldsGuard mitigates this with evolving traffic models that assess not just individual requests but sequences over time. Clusters of traffic that show signs of adaptation—such as shifting patterns from the same ASN or rotating identity headers—are isolated and redirected.
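One way to picture sequence-level assessment is a sliding window per ASN that measures how often the identity (IP plus User-Agent) changes. The sketch below is illustrative only: `RotationDetector`, its thresholds, and the choice of identity key are assumptions, and the ASN and IP values come from ranges reserved for documentation.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Tuple


class RotationDetector:
    """Hypothetical detector: flags ASNs where almost every request is a new identity."""

    def __init__(self, window_seconds: float = 600.0, min_requests: int = 20,
                 min_rotation_ratio: float = 0.8) -> None:
        self.window_seconds = window_seconds          # assumed look-back window
        self.min_requests = min_requests              # ignore clusters with too little data
        self.min_rotation_ratio = min_rotation_ratio  # distinct identities / requests
        self._events: Dict[int, Deque[Tuple[float, str, str]]] = defaultdict(deque)

    def observe(self, asn: int, ts: float, ip: str, user_agent: str) -> bool:
        """Record one request and return True if the ASN shows identity rotation."""
        events = self._events[asn]
        events.append((ts, ip, user_agent))
        # Drop events older than the window (timestamps assumed non-decreasing).
        while ts - events[0][0] > self.window_seconds:
            events.popleft()
        if len(events) < self.min_requests:
            return False
        identities = {(e_ip, e_ua) for _, e_ip, e_ua in events}
        return len(identities) / len(events) >= self.min_rotation_ratio


detector = RotationDetector()
# Twenty requests from one ASN, each with a fresh IP and User-Agent.
rotating = [detector.observe(64500, i * 10.0, f"203.0.113.{i}", f"agent-{i}")
            for i in range(20)]
# Twenty requests from another ASN, shared by only two stable identities.
stable = [detector.observe(64501, i * 10.0, f"198.51.100.{i % 2}", "agent-x")
          for i in range(20)]
```

The ratio, rather than a raw count of identities, is a deliberate choice in this sketch: a large consumer ISP legitimately hosts many identities, but each of them usually sends more than one request.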

Phase 4: Counter-Intelligence – Weaponizing Deception

When certain behavioral thresholds are crossed, ShieldsGuard activates honeypot redirection. Instead of returning errors or hard blocks, suspicious traffic is routed to decoy endpoints designed to contain and study automated agents.

These controlled zones allow continued observation while shielding production infrastructure. In parallel, data from these interactions feeds into global reputation models, enhancing proactive blocking for future requests.
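The routing decision behind honeypot redirection can be sketched as a threshold on a risk score. Everything named here is hypothetical: the `DeceptionRouter` class, the upstream labels, and the 0.8 threshold are assumptions, and the risk score is taken as given from whatever detection stages run earlier.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class DeceptionRouter:
    """Hypothetical router: sends high-risk traffic to a decoy instead of blocking it."""
    honeypot_threshold: float = 0.8           # assumed risk cut-off
    production_upstream: str = "production"   # placeholder upstream label
    decoy_upstream: str = "decoy"             # placeholder upstream label
    captured: List[Tuple[str, str]] = field(default_factory=list)

    def route(self, client_id: str, path: str, risk_score: float) -> str:
        """Pick an upstream and keep a record of everything sent to the decoy."""
        if risk_score >= self.honeypot_threshold:
            # Recorded interactions could later feed a reputation model.
            self.captured.append((client_id, path))
            return self.decoy_upstream
        return self.production_upstream


router = DeceptionRouter()
bot_route = router.route("client-a", "/login", risk_score=0.93)
user_route = router.route("client-b", "/login", risk_score=0.10)
```

Because the suspicious client receives a plausible response rather than an error, it gets no clear signal that it has been detected, which is the point of deception over hard blocking.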

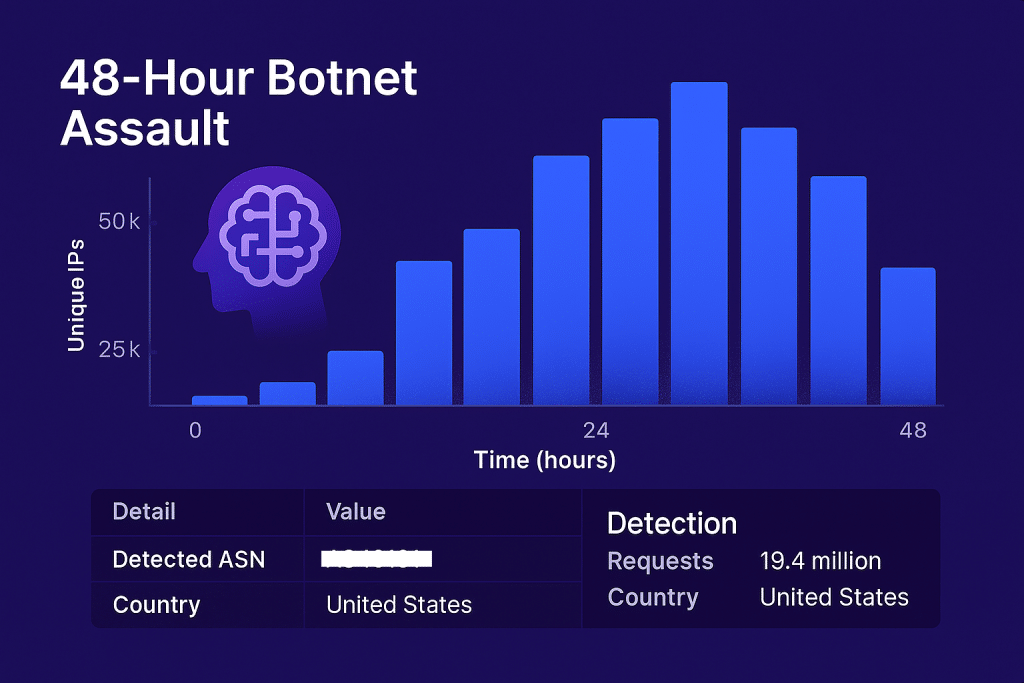

Real-World Case Study: Adaptive Crawler Neutralization in 48 Hours

In early Q2 2025, a fintech customer protected by ShieldsGuard experienced a sustained adaptive bot attack. Over 48 hours:

- The attacker rotated through more than 28,000 unique IPs

- The attacker bypassed three commercial WAF solutions

- ShieldsGuard flagged the traffic within 37 minutes using its behavioral AI stack

- 91% of bot requests were routed into honeypot endpoints

- The attacker’s entire probing logic was mapped and mitigated

The attack was fully neutralized without downtime or user friction.

Conclusion

Botnets today are no longer static tools; they are feedback-driven, environment-aware, and resilient. Defeating them requires not just strong defenses, but intelligent systems that respond in kind.

ShieldsGuard’s layered defense strategy, built around adaptive deception, behavioral telemetry, and AI-enhanced classification, redefines what modern web protection looks like.

Instead of reacting to botnets, we anticipate them.

And we make sure their learning leads them nowhere.